Coding music

As easy as Pi

Presented by Xavier Riley / @xavriley

@xavriley

works at: @opencorporates

slides: sonic-pi-talk.herokuapp.com

github: github.com/xavriley

What's all this about?

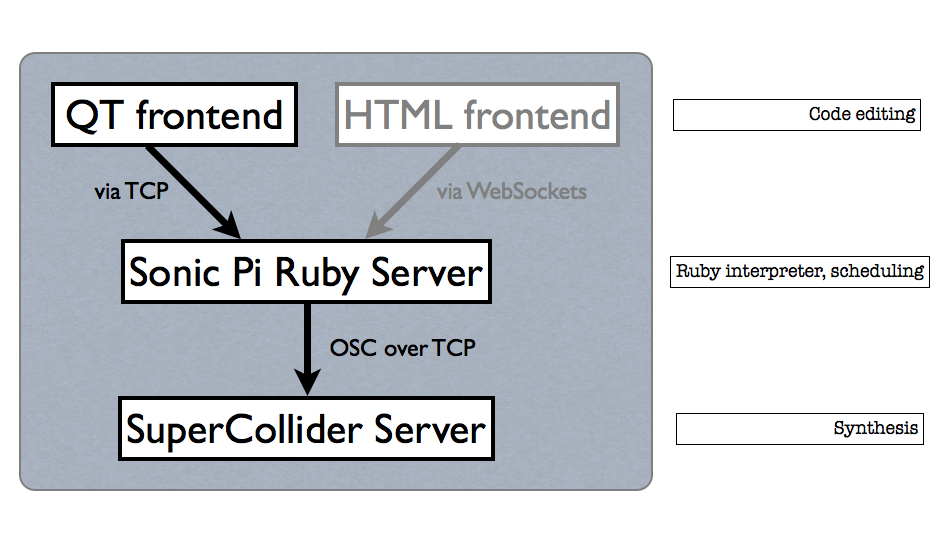

Sonic Pi: a new cross-platform music programming environment that uses the Ruby programming language. It aims to teach computer science concepts through creating music.

Where will it work?

It currently runs on Mac OS X (back to 10.6) and Linux, and is distributed with the Raspberry Pi.

Windows support is planned - volunteers wanted!

Download the latest version here: http://sonic-pi.net/

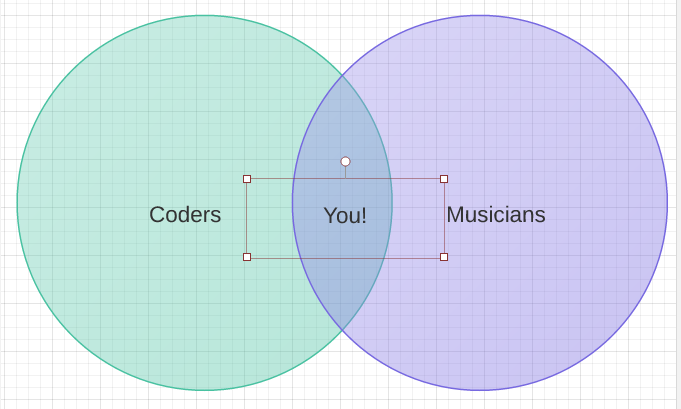

Who is this for?

Please ask questions!

Overview

- Overture: a love story in 3 acts

- Making music: A quick tutorial

- Under the hood: How it works

- Coda: so what?

Overture: a love story in 3 acts

My personal coding journey

Act 1: Interactive Music with Creative Computing

Act 2: Becoming a professional programmer

Act 3: Coming back to music

Making Music

The four esses

- sampling

- synthesis

- effects

- sequencing

- (and for bonus points)

- stochasticism

- Algorave

But first... Using the built-in tutorial and docs

Since I proposed this talk, Sonic Pi has shipped with great documentation and an extensive tutorial full of examples. You should check that out first - what follows is a quick run through.

Sampling

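```ruby
sample :perc_bell
sleep 5

# a negative rate plays the sample backwards
sample :drum_cymbal_open, rate: -1
sleep 5

# half speed: an octave lower and twice as long
sample :drum_cymbal_open, rate: 0.5
sleep 5

# play only the second half of the loop
sample :loop_amen, start: 0.5, finish: 1
sleep 5

3.times do
  sample :loop_amen
  sleep(sample_duration(:loop_amen))
end
```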
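The `rate:` option behaves like changing the speed of a record: slower is lower and longer, and a negative rate plays backwards. A rough sketch of that arithmetic in plain Ruby (`rate_effect` is a hypothetical helper written for this slide, not part of Sonic Pi, and it assumes speed and pitch both scale directly with the rate):

```ruby
# Hypothetical helper, not part of Sonic Pi: how a playback rate changes a
# sample, assuming speed and pitch both scale directly with the rate.
def rate_effect(duration, rate)
  raise ArgumentError, "rate must be non-zero" if rate.zero?

  {
    duration: duration / rate.abs.to_f,   # seconds of audio heard
    semitones: 12 * Math.log2(rate.abs),  # pitch shift in semitones
    reversed: rate.negative?              # a negative rate plays backwards
  }
end

p rate_effect(2.0, 0.5)
p rate_effect(2.0, -1)
```

So `rate: 0.5` on a 2-second sample gives 4 seconds of audio, 12 semitones (one octave) lower.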

Sampling (advanced)

```ruby
use_debug false

# 16 equal [start, finish] slices of the loop, e.g. [0.0, 0.0625]
steps = (0..1).step(1/16.0).each_cons(2).to_a
steps.shuffle! # randomise the order
puts steps.inspect

4.times do
  steps.each do |s, f|
    sample :loop_amen, start: s, finish: f
    sleep(sample_duration(:loop_amen) / 16.0)
  end
end
```
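Because the slicing is plain Ruby, it can be checked outside Sonic Pi. This sketch (the `slices` helper and the seed 42 are illustrative choices, not Sonic Pi API) confirms that the 16 shuffled slices still cover the whole loop exactly once; seeding Ruby's `Random` makes the shuffle repeatable from run to run:

```ruby
# Plain-Ruby check of the slicing arithmetic (no Sonic Pi needed).
# Each slice is a [start, finish] pair expressed as a fraction of the sample.
def slices(count)
  (0..1).step(1.0 / count).each_cons(2).to_a
end

steps = slices(16)
shuffled = steps.shuffle(random: Random.new(42)) # seeded, so the order is repeatable

puts steps.length                    # number of slices
puts shuffled.sum { |s, f| f - s }   # total fraction of the loop covered
```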

Sampling

- you can load your own samples too

- any .WAV file can be loaded

Synthesis

```ruby
play :c4
sleep 3

play :c4, sustain: 2
sleep 5

# Sonic Pi comes with 23 different synth sounds
with_synth :dsaw do
  play :c4, sustain: 2, amp: 0.3, attack: 1
end
```
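Note names such as `:c4` are shorthand for MIDI note numbers, which in turn map to frequencies. A minimal sketch of that conversion, assuming Sonic Pi's convention that `:c4` is MIDI note 60 and `:a4` is 440 Hz, and assuming `s`/`b` suffixes for sharps and flats (`note_to_midi` and `midi_to_frequency` are hypothetical standalone helpers):

```ruby
# Hypothetical helpers: :c4 is MIDI note 60 and :a4 (440 Hz) is MIDI note 69.
PITCH_CLASSES = { c: 0, d: 2, e: 4, f: 5, g: 7, a: 9, b: 11 }.freeze

def note_to_midi(name)
  m = name.to_s.downcase.match(/\A([a-g])(s|b)?(-?\d+)\z/)
  raise ArgumentError, "unrecognised note #{name.inspect}" unless m

  letter, accidental, octave = m.captures
  offset = { "s" => 1, "b" => -1 }.fetch(accidental, 0) # sharp, flat or natural
  (octave.to_i + 1) * 12 + PITCH_CLASSES[letter.to_sym] + offset
end

# Equal temperament: every 12 semitones doubles the frequency.
def midi_to_frequency(midi)
  440.0 * 2**((midi - 69) / 12.0)
end

puts note_to_midi(:c4)
puts midi_to_frequency(note_to_midi(:a4))
```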

Synthesis (advanced)

```ruby
time, synths, n, wait_time = 10, [], 30, 4
rand_note = lambda { rrand(note(:A2), note(:A4)) }
use_synth :dsaw

n.times do
  # collect the synths in an array for controlling later;
  # pan spreads the voices across the stereo field (-1 = left, 1 = right)
  synths << play(rand_note.call, detune: rrand(0, 1),
                 detune_slide: time, sustain: time,
                 amp: (1.0 / n), pan: rrand(-1, 1))
end

sleep wait_time

synths.each do |t|
  slide_time = rrand(wait_time, (time - 2))
  t.control note: (one_in(2) ? 70 : 46), note_slide: slide_time, detune: 0.1
end
```
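Two details in this example are simple arithmetic: giving each of the 30 voices `amp: (1.0/n)` keeps the sum of their amplitudes at 1.0, so they roughly cannot exceed full scale together, and `note_slide:` moves a parameter gradually to its new value. A plain-Ruby sketch of both, assuming a straight-line slide (`total_amp` and `slide_value` are hypothetical helpers):

```ruby
# Sketch of two ideas from the code above (not Sonic Pi internals).
# 1. n voices at amp 1/n add up to a total amplitude of 1.0.
def total_amp(voices)
  Array.new(voices, 1.0 / voices).sum
end

# 2. A slide moves a value from `from` to `to` over slide_time seconds;
#    this assumes a linear slide and holds the target once it is reached.
def slide_value(from, to, slide_time, elapsed)
  return to if elapsed >= slide_time

  from + (to - from) * (elapsed / slide_time.to_f)
end

puts total_amp(30)
puts slide_value(46, 70, 4, 2) # halfway through a 4-second slide
```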

Synthesis

- you can load your own "synthdefs"

- these can be created in Overtone or SuperCollider

Effects

```ruby
use_bpm 120
use_synth :dsaw

# nil is a rest
notes = [:a1, :a2, :g1, :g1, :g1, :g2, :e1, :e2, nil, :f1, nil, :g1, nil]
durations = [2, 2, 0.66, 0.66, 0.66, 2, 2, 1, 1, 1, 1, 1, 1]

# first pass: dry
play_pattern_timed(notes, durations)

# second pass: the same riff through three nested effects
with_fx :slicer, pulse_width: 0.25 do
  with_fx :wobble, mix: 0.5, res: 0.3, phase: 0.25 do
    with_fx :distortion, distort: 0.99 do
      play_pattern_timed(notes, durations)
    end
  end
end
```
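Nested `with_fx` blocks form a chain: the riff goes through the innermost effect (`:distortion`) first, then `:wobble`, then `:slicer`. The toy model below treats each effect as a function over an array of samples and applies them in that order. It is plain Ruby with two simplified stand-ins (`distortion`, `slicer`) and a hypothetical `nested_fx` helper; none of these are Sonic Pi's actual FX:

```ruby
# Toy model: an effect is a lambda from an array of samples to a new array.
def distortion(amount)
  drive = 1 + 10 * amount
  ->(buf) { buf.map { |x| Math.tanh(x * drive) } } # soft clipping
end

# Lets sound through for the first pulse_width fraction of each period.
def slicer(period, pulse_width)
  open_for = period * pulse_width
  ->(buf) { buf.each_with_index.map { |x, i| (i % period) < open_for ? x : 0.0 } }
end

# Effects listed outermost first, mirroring how the with_fx blocks are written;
# the innermost effect is applied to the sound first.
def nested_fx(*outer_to_inner)
  ->(buf) { outer_to_inner.reverse.reduce(buf) { |acc, fx| fx.call(acc) } }
end

chain = nested_fx(slicer(4, 0.25), distortion(0.99))
p chain.call(Array.new(8, 0.5)).map { |x| x.round(3) }
```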

Effects

- Lots more to be done here!

Sequencing

```ruby
# set the bpm so that one pass of :loop_amen lasts exactly 4 beats
use_bpm (60 / (sample_duration(:loop_amen) / 4))

in_thread(name: :master) do
  loop do
    3.times do
      cue :play_backbeat
      sleep 4
    end
    cue :play_fill
    sleep 4
  end
end

in_thread(name: :beats) do
  loop do
    sync :play_backbeat
    sample :loop_amen
  end
end

in_thread(name: :fills) do
  use_debug false
  loop do
    sync :play_fill
    # retrigger the third quarter of the loop every 1/4 beat (16 x 0.25 = 4 beats)
    16.times { sample :loop_amen, start: 0.5, finish: 0.75; sleep 0.25 }
  end
end
```
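The tempo line and the `:master` thread are both simple arithmetic: if a 4-beat loop lasts `d` seconds, one beat lasts `d / 4` seconds, so the tempo is `60 / (d / 4)` BPM. A sketch in plain Ruby (`bpm_for_loop` and `cue_schedule` are hypothetical helpers, and the 2-second duration is a made-up example, not the real length of `:loop_amen`):

```ruby
# Tempo at which a loop of `beats` beats lasting `duration` seconds fits exactly.
def bpm_for_loop(duration, beats = 4)
  60.0 / (duration / beats)
end

# Which cue the :master thread sends at the start of each four-beat bar:
# three backbeats, then one fill, repeating.
def cue_schedule(bars)
  (0...bars).map { |bar| bar % 4 == 3 ? :play_fill : :play_backbeat }
end

puts bpm_for_loop(2.0) # a hypothetical 2-second, 4-beat loop
p cue_schedule(8)
```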

Stochasticism

(or randomness)

```ruby
use_random_seed(3)

puts one_in(6)      # returns a boolean
puts dice(6)        # returns an integer between 1 and 6
print rrand(0, 10)  # "ranged" random float between 0 and 10

# Get the current seed
puts (Thread.current.thread_variable_get :sonic_pi_spider_random_generator).seed
```
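The key property is that a fixed seed gives the same sequence on every run, so a "random" piece can be replayed exactly. A plain-Ruby sketch of the idea using Ruby's `Random` class (`SeededDice` and its methods mimic the names above but are hypothetical, not Sonic Pi's implementation):

```ruby
# Plain-Ruby analogues of one_in, dice and rrand, all drawing from one
# seeded generator so the same seed reproduces the same choices.
class SeededDice
  def initialize(seed)
    @rng = Random.new(seed)
  end

  def one_in(n)
    @rng.rand(n).zero? # true roughly once every n calls
  end

  def dice(sides = 6)
    @rng.rand(1..sides) # integer from 1 to sides inclusive
  end

  def rrand(min, max)
    min + @rng.rand * (max - min) # float in [min, max)
  end
end

a = SeededDice.new(3)
b = SeededDice.new(3)
p [a.one_in(6), a.dice(6), a.rrand(0, 10)]
p [b.one_in(6), b.dice(6), b.rrand(0, 10)] # identical to the line above
```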

Stochasticism (advanced)

```ruby
use_bpm 120

define :map_to_beats do |index_range|
  index_range = Array(index_range)
  if (index_range.length % 4 == 0)
    onbeats = index_range.each_slice(4).map(&:first)
    index_range.map { |i|
      @current_beat = (onbeats.index(i) + 1) if (i % 4 == 1)
      {
        beat: @current_beat,
        subdiv: ((i - 1) % 4),
        onbeat: i.odd?,
        beat_index: i
      }
    }
  end
end

define :randobeat do
  # by playing with the "length" of onbeats vs offbeats
  # we get different groove feelings
  sleep_times = [0.25, 0.25]
  sleep_times = [0.23, 0.27] if one_in(4)
  sleep_times = [0.27, 0.23] if one_in(8)

  map_to_beats(1..16).each do |b|
    i = b[:beat_index]

    sample :drum_bass_hard if (b[:beat] == 1 && b[:subdiv] == 0)

    # only create the reverb when there is a blip to put through it
    if b[:beat] == 3 && b[:subdiv] == 0
      with_fx :reverb, mix: 0.01 do
        sample :elec_blip
      end
    end

    if b[:subdiv] == 0
      # strong beats
      sample :drum_cymbal_closed
    elsif one_in(3) && b[:subdiv] == 2
      # offbeat 8ths
      sample :drum_cymbal_closed
      sample :drum_bass_hard if one_in(6)
    elsif one_in(5)
      # offbeat 16ths
      sample :drum_cymbal_closed
      sample :drum_bass_hard if one_in(6)
    end

    sleep sleep_times.first if i.odd?
    sleep sleep_times.last if i.even?
  end
end

in_thread(name: :beat) do
  loop { randobeat }
end
```
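Since `map_to_beats` is ordinary Ruby, it can be tested outside Sonic Pi. Below is a standalone copy written as a plain method (tracking the current beat in a local variable), plus a hypothetical `bar_length` helper showing that the swing settings only shift time within each pair of 16ths: with `[0.23, 0.27]` the bar still lasts 8 * 0.5 = 4 beats.

```ruby
# Standalone version of map_to_beats: labels each 16th-note step of a bar.
def map_to_beats(index_range)
  indices = Array(index_range)
  return nil unless (indices.length % 4).zero?

  onbeats = indices.each_slice(4).map(&:first)
  current_beat = nil
  indices.map do |i|
    current_beat = onbeats.index(i) + 1 if i % 4 == 1
    { beat: current_beat, subdiv: (i - 1) % 4, onbeat: i.odd?, beat_index: i }
  end
end

# Total length of a bar when odd steps sleep sleep_times.first and even
# steps sleep sleep_times.last, as in randobeat.
def bar_length(sleep_times, steps = 16)
  (1..steps).sum { |i| i.odd? ? sleep_times.first : sleep_times.last }
end

p map_to_beats(1..16)[5]
puts bar_length([0.23, 0.27])
```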

Algorave

Honourable mentions

- Live coding is a real thing!

- Grew out of academic work on music programming.

- Check out toplap.org

Algorave

Meta-eX, Yaxu, Repl-electric

https://github.com/meta-ex/ignite (source of that performance)


Video: "The Stars" by Repl Electric (on Vimeo)

Under the hood

How it works

Making music imperative

sleep is doing a lot more than you think

Truly honoured to have worked with @dorchard on this FARM '14 paper "A temporal semantics for a live coding language" http://t.co/sU2xxOUTL5

Sam Aaron (@samaaron), July 3, 2014

AFAIK SuperCollider has better scheduling support than HTML5 WebAudio (for now)

sleep explained

One of the motivations for Sonic Pi was to model music in a way kids could understand

The other was targeting the Raspberry Pi, which is not exactly high-powered

Using sleep feels a bit like programming in BASIC, which is easier for beginners to grasp

To keep timing tight, Sonic Pi inserts a tiny gap at the start of each run, which gives it just enough time to schedule the events

It fails gracefully if it can't schedule the event in time because, in music, missing a beat is better than playing it out of time

Read the paper linked above for a full explanation
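As a rough mental model (a toy sketch, not Sonic Pi's actual scheduler): `sleep` advances a logical clock, each `play` is stamped with that logical time plus a small schedule-ahead margin, and an event whose deadline has already passed is dropped rather than played late. The `VirtualClock` class and its 0.5-second margin are assumptions for illustration; a real implementation would also make `sleep` wait so the code cannot run far ahead of the audio.

```ruby
# Toy model of logical time: sleep moves a logical clock forward, and play
# stamps each event with logical time + a schedule-ahead margin.
class VirtualClock
  attr_reader :events, :dropped

  def initialize(bpm: 60, sched_ahead: 0.5)
    @bpm = bpm
    @sched_ahead = sched_ahead
    @logical = 0.0
    @events = []
    @dropped = []
  end

  # Advance logical time by a number of beats (converted to seconds).
  def sleep(beats)
    @logical += beats * 60.0 / @bpm
  end

  # `now` is the wall-clock time (seconds since the run started) when the code
  # reaches this call; it is passed in so the example is deterministic.
  # Late events are dropped: missing a beat beats playing it out of time.
  def play(note, now:)
    due = @logical + @sched_ahead
    (now <= due ? @events : @dropped) << [due, note]
  end
end

clock = VirtualClock.new(bpm: 120)
clock.play(:c4, now: 0.01)
clock.sleep(0.5)
clock.play(:e4, now: 0.02)
clock.sleep(0.5)
clock.play(:g4, now: 2.0) # the code fell far behind; this slot has passed
p clock.events
p clock.dropped
```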

Coda

- Music as data

- Rethinking the digital music stack

- Code vs. DAWs

Music as data, music as text - so much left to explore here

Here's a gist with a comment trading some auto-generated dubstep

There's still no great DSL/text representation for melodic music - an interesting challenge for someone!
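One reason "music as data" is appealing: once notes are numbers, classic compositional transformations become ordinary array operations. A plain-Ruby sketch (the helper names are hypothetical; the pattern is the `note_seq` from the closing piece, with an offset of 65 chosen for illustration):

```ruby
# Melodies as plain data: a list of MIDI note numbers.
NOTE_SEQ = [-1, 1, 6, 8, 9, 1, -1, 8, 6, 1, 9, 8]
MELODY = NOTE_SEQ.map { |x| 65 + x }

def transpose(notes, semitones)
  notes.map { |n| n + semitones }
end

def retrograde(notes)
  notes.reverse # the melody played backwards
end

def phase_shift(notes, steps)
  notes.rotate(steps) # start the loop `steps` notes later
end

p transpose(MELODY, 12).first(4) # up an octave
p retrograde(MELODY).first(4)
p phase_shift(MELODY, 1).first(4)
```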

Sonic Pi saves each workspace as a git repo! ~/.sonic-pi/store/default

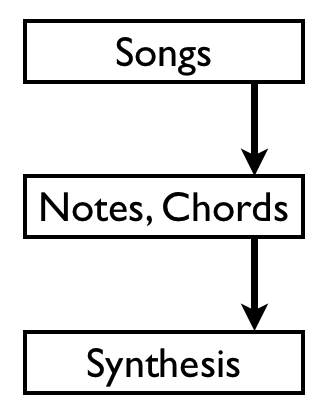

The digital music stack

Are we programming at the right level of abstraction?

For example

From the SuperCollider (SCLang) tutorial

```supercollider
(
{ // Open the Function
    SinOsc.ar( // Make an audio rate SinOsc
        440, // frequency of 440 Hz, or the tuning A
        0, // initial phase of 0, or the beginning of the cycle
        0.2) // mul of 0.2
}.play; // close the Function and call 'play' on it
)
```

There's a lot of detail here

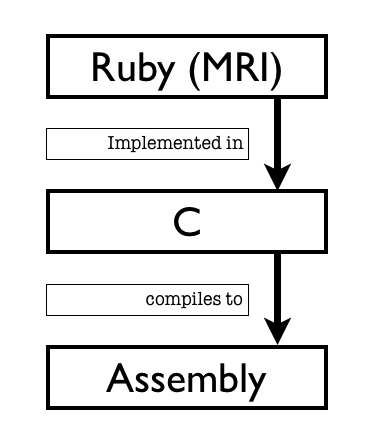

SCLang is effectively recreating the world of analog modular synthesizers in code (with 'oscillator' functions that plug into other 'oscillator' functions).

It excels at synth and sound design, but it's a complex medium for sequencing and arranging music.
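To make the "oscillators plugged into oscillators" idea concrete, here is a toy version in Ruby rather than SCLang. It is only a sketch: `sin_osc` and `value_at` are hypothetical and compute single values in Ruby, whereas SuperCollider runs its unit generators on the audio server. An oscillator here is a function of time, and its `mul` input can be a number or another oscillator:

```ruby
# An oscillator is a function of time t (seconds). Its mul input may be a
# plain number or another oscillator evaluated at the same t, like patching
# one module's output into another module's input.
def value_at(input, t)
  input.respond_to?(:call) ? input.call(t) : input
end

# Mirrors the argument order of SinOsc.ar(freq, phase, mul).
def sin_osc(freq, phase = 0.0, mul = 1.0)
  ->(t) { value_at(mul, t) * Math.sin(2 * Math::PI * freq * t + phase) }
end

tone = sin_osc(440, 0, 0.2)    # like SinOsc.ar(440, 0, 0.2)
lfo = sin_osc(2, 0, 0.2)       # a slow 2 Hz oscillator...
tremolo = sin_osc(440, 0, lfo) # ...patched into the amplitude: tremolo

sample_rate = 44_100
samples = (0...sample_rate / 100).map { |i| tone.call(i.to_f / sample_rate) }
puts samples.map(&:abs).max    # peak level, at most 0.2
```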

Sonic Pi leverages the synth designs but draws a boundary. You can't create these oscillating functions directly.

It's a separation of concerns. There's a theoretical loss of power, but it's also liberating not to have to worry about it (think memory management in C vs. GC in Ruby).

(see refs: "Design, Composition and Performance")

Aside: Research (Hirsch - Cultural Literacy) has shown that a reader needs to understand 95% of the words in a text to have a chance of understanding it. Does the same apply to computing paradigms and syntax?

Code vs. DAWs

“The other thing that's interesting is that for each of these knobs has a corresponding jack. In other words there a human interface and a machine interface to the same things. The machine interfaces were there all the time, in fact they were first, and then the human interfaces come along. We have to remember that as a design thing, right? What's wrong with SQL? (pause) What's wrong with SQL is that there's no machine interface to SQL. They only designed a human interface and we've suffered ever since having to send these strings around. You can always build a human interface on top of a machine interface. The other way round is often disgusting.”

Rich Hickey, "Design, Composition and Performance"

| Sonic Pi | Ableton Live |
|---|---|
| Machine Interface | Human Interface |
| Free | €998 (on sale) |
| Expressivity | Interfaces |
| Coding | "Music Technology" |

Of course I'm trolling here (a little bit).

The boundaries are blurry here too: Ableton has Max/MSP (via Max for Live) and a subset of Python, and Renoise has Lua scripting.

An analogy: I think Excel is an incredible tool, but it makes some things impossible (e.g. you couldn't build OpenCorporates in MS Access). Now think of the music that could only be made in code.

A concrete example

The day after I wrote this, I saw this piece on the programming subreddit:

"Launching One Clip at a Time in Ableton Live 9 with the APC40 Using Python MIDI Remote Scripts"

It's over 1,000 words. Do you still think Hickey's choice of words was too strong?

To finish: a piece that couldn't be written in Ableton

```ruby
# Transcribed from the SC140 project: http://ia600406.us.archive.org/16/items/sc140/sc140_sourcecode.txt
# f={|t|Pbind(\note,Pseq([-1,1,6,8,9,1,-1,8,6,1,9,8]+5,319),\dur,t)};Ptpar([0,f.(1/6),12,f.(0.1672)],1).play
# Orig. code by Batuhan Bozkurt (refactored by Charles Celeste Hutchins)
# Steve Reich style phasing
# Pan added by me

note_seq = [-1, 1, 6, 8, 9, 1, -1, 8, 6, 1, 9, 8]

# In SC, \note 0 is middle C (MIDI 60), so the original's +5 offset means 65 + x.
# rrand is called inside the lambda, so each note gets a fresh pan position.
note = ->(x) { play 65 + x, release: 0.2,
                 pan: rrand(0.4, 0.6), decay: 0.01,
                 attack: 0.005, amp: 0.4 }

# two voices play the same pattern at very slightly different speeds
in_thread { loop { note_seq.each { |n| note.call(n); sleep(1.0 / 6) } } }
in_thread { loop { note_seq.each { |n| note.call(n); sleep 0.1672 } } }
```
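The phasing comes from one small difference: 1/6 s versus 0.1672 s per note (both in seconds at the default 60 BPM). Each note of the second voice is 0.1672 - 1/6 ≈ 0.00053 s longer, so it falls a whole note behind after (1/6) / 0.00053 ≈ 312.5 notes, roughly 26 passes through the 12-note pattern or about 52 seconds. The arithmetic in plain Ruby (the helper name is hypothetical):

```ruby
# The slower voice loses (slow - fast) seconds per note, so it falls one
# whole note behind after fast / (slow - fast) notes.
def notes_until_one_note_behind(fast, slow)
  fast / (slow - fast)
end

fast = 1.0 / 6 # seconds per note, first voice
slow = 0.1672  # seconds per note, second voice

notes = notes_until_one_note_behind(fast, slow)
puts notes        # notes until the voices are one note apart
puts notes / 12   # passes through the 12-note pattern
puts notes * slow # seconds of music
```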

I'd like to thank...

- Kyan for being awesome

- Sam Aaron for writing Sonic Pi and answering all my questions

- Scott Wilson + Birmingham Uni for starting me on the path

- My wife for putting up with me whilst I wrote this

Thanks!

“Ah, music,” he said, wiping his eyes. “A magic far beyond all we do here!”

Albus Dumbledore

References

Image credits:

- http://cdn.synthtopia.com/wp-content/uploads/2010/07/modular-synthgasm.jpg

- http://missrosen.files.wordpress.com/2011/11/oup-424221chandlerthebigsleep.jpg

- http://cps-static.rovicorp.com/3/JPG_400/MI0003/284/MI0003284914.jpg?partner=allrovi.com

Big old list of bookmarks from research

http://www.earslap.com/instruction/recreating-the-thx-deep-note

http://www.earslap.com/instruction/running-baudline-on-mac-os-x

http://magpi.techjeeper.com/The-MagPi-issue-23-en.pdf

https://gist.github.com/urbanautomaton/11ce2acb775d1bc24898

https://gist.github.com/aaron-santos/3651346

https://github.com/philandstuff/ldnclj-dojo-2014-05/blob/master/src/ldnclj_dojo_2014_05/core.clj

https://www.youtube.com/watch?v=ghDpcGuUUm4

http://sonic-pi.net/get-v2.0

http://www.fredrikolofsson.com/pages/code-sc.html


http://supercollider.sourceforge.net/audiocode-examples/

http://www.mmlshare.com/tracks/view/403

http://www.mmlshare.com/tracks/view/378

http://home.ieis.tue.nl/dhermes/lectures/soundperception/htmlfiles/fmFm.html

http://www.michaelbromley.co.uk/blog/105/what-makes-a-good-tech-talk

http://pastebin.com/uYvrqANQ

http://www.reddit.com/r/chiptunes/

http://gibber.mat.ucsb.edu/

http://diydsp.com/livesite/

https://github.com/stagas/audio-process

http://studio.substack.net/wavepot

https://watilde.github.io/beeplay/

http://blog.chrislowis.co.uk/waw/2014/06/14/web-audio-weekly-19.html

http://www.openscad.org/

http://musicforgeeksandnerds.com/

http://toplap.org/

http://wavepot.com/

http://www.acm.org/search?SearchableText=music

https://soundcloud.com/repl-electric/sets/live-coding

http://www.mcld.co.uk/blog/blog.php?235

http://www.generalguitargadgets.com/effects-projects/distortion/rat/

http://superdupercollider.blogspot.co.uk/2009/05/crushing-bits-for-fun-and-profit.html

https://github.com/overtone/overtone/blob/master/src/overtone/studio/fx.clj

https://en.wikipedia.org/wiki/Chiptune

http://www.nullsleep.com/treasure/mck_guide/

https://en.wikipedia.org/wiki/Music_Macro_Language

http://www.mariopiano.com/mario-sheet-music-overworld-main-theme.html

http://elinux.org/RPi_Text_to_Speech_(Speech_Synthesis)

http://new-supercollider-mailing-lists-forums-use-these.2681727.n2.nabble.com/Text-To-speech-td4520252.html

http://tm.durusau.net/?p=54881&utm_source=dlvr.it&utm_medium=twitter

http://www.infoq.com/presentations/programming-language-communication

https://www.facebook.com/media/set/?set=a.691766514210545.1073741881.109367642450438&type=1

http://slidedeck.io/about

http://toplap.org/overtone/

http://hg.postspectacular.com/resonate-2013/src/0cbf14634d51/src/resonate2013/?at=default

https://www.ableton.com/en/trial/

https://en.wikibooks.org/wiki/Sound_Synthesis_Theory

http://daveyarwood.github.io/music/2014/08/23/introducing-riffmuse/?utm_source=dlvr.it&utm_medium=twitter

http://www.renoise.com/products/renoise

http://thelackthereof.org/wiki.rss/Overtone_Adventures

http://supercollider.calumgunn.com/

http://toplap.org/tidal/

http://www.creativefront.org/projects-and-events/events/thesummit

http://www.sussex.ac.uk/Users/nc81/modules/cm1/workshop.html

http://www.sussex.ac.uk/Users/nc81/modules/cm1/scfiles/11.1%20Physical%20Modelling.html

http://www.sussex.ac.uk/Users/nc81/modules/cm1/scfiles/11.2%20Analogue%20Modelling.html

http://cambridge105.fm/podcasts/105-drive-09-09-2014/?utm_source=dlvr.it&utm_medium=twitter

http://webmagazin.de/en/Whats-it-like-to-work-for-Ableton-173156

https://www.youtube.com/watch?v=TePRrmX9g3U

http://www.infoq.com/presentations/Design-Composition-Performance

http://www.kylegann.com/histune.html

https://github.com/TONEnoTONE/Tone.js

https://skillsmatter.com/skillscasts/5511-a-gentle-introduction-to-music-theory-in-ruby